Image Analysis Software With 3D Scene Reconstruction: Should You Build It on Your Own?

If you are developing a virtual finishing or furniture try-on tool, interior design software, or a solution for the real estate sector, you are probably considering adding image analysis functionality. This leads to a question: should you build it yourself or find out-of-the-box software? Which option fits your case best?

In this article, you will learn what expertise, tech equipment, and scope of work are needed to build image analysis software with 3D scene reconstruction.

Required expertise to develop an image analyzer with semantic 3D reconstruction

Interior image analysis has its own challenges: a picture may include mirrors, tight spaces, or heavy clutter. Photos can also be taken with different focal lengths, fields of view, and camera heights.
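To illustrate why focal length matters, here is a minimal sketch of the standard pinhole-camera relation between focal length and field of view. The function names and the 36 mm default sensor width (a full-frame sensor) are assumptions made for this example; real phone cameras have much smaller sensors and lens distortion that this ideal model ignores.

```python
import math

def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float = 36.0) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees.

    The 36 mm default is an assumption (full-frame sensor width).
    """
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))

def focal_length_px(image_width_px: int, fov_deg: float) -> float:
    """Focal length expressed in pixels for a given image width and horizontal FOV."""
    return (image_width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)

# The same room photographed with different lenses covers very different angles.
for f_mm in (14, 24, 50):
    fov = horizontal_fov_deg(f_mm)
    print(f"{f_mm} mm lens: FOV {fov:.1f} deg, focal length {focal_length_px(4000, fov):.0f} px")
```

A shorter focal length gives a wider view of the room, which changes how walls and floors project into the image. An analyzer that works from arbitrary photos has to estimate or tolerate these parameters rather than assume them.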

To address these challenges from scratch, you need expertise across multiple disciplines, including deep learning, computer vision, computational geometry, and dataset engineering.

For example, the work involves a hybrid of AI and classical methods: deep learning for detection and segmentation, combined with 3D graphics algorithms.

Tech equipment needed for image analysis with 3D reconstruction AI

There are two ways to implement software for the analysis of interior images:

- with specialized hardware;

- device-agnostic.

Here is how the two approaches compare:

| Aspect | Equipment-based approach (LiDAR, stereo cameras, AR sensors) | Device-agnostic approach (deep learning, 3D graphics-based ray tracing) |
| --- | --- | --- |
| Nature | Relies on physical sensors capturing raw environmental data | Uses algorithms and models to interpret or simulate data independent of hardware |
| Data acquisition | Direct measurement of depth, distance, and spatial info via lasers, cameras, or sensors | Generates or processes data via learned models or rendering techniques |
| Accuracy & precision | LiDAR: very high accuracy with cm- to mm-level precision; considered ground truth in many benchmarks. Stereo cameras: moderate accuracy; improved with advanced algorithms but generally less precise than LiDAR, especially at longer distances or on low-texture surfaces. AR sensors: variable precision depending on sensor type; often less accurate than LiDAR and stereo cameras. | Deep learning: does not directly measure physical distances; accuracy depends on training data and model generalization. It can infer depth or features but is inherently approximate and probabilistic. Ray tracing: makes it possible to calculate a 3D model of the objects in the image. |
| Environmental dependency | Performance depends on lighting, weather, and surface reflectivity (e.g., LiDAR is affected by fog, stereo cameras by lighting) | Less sensitive to physical conditions; simulations and models operate under controlled assumptions |
| Cost & hardware | High for LiDAR, moderate for stereo cameras, variable for AR sensors | Lower hardware cost but requires powerful computation (GPUs/TPUs) for training and inference |
| Flexibility & adaptability | Limited to sensor capabilities; fixed by hardware design | Highly adaptable; deep learning models can learn from diverse data |
| Real-time capability | LiDAR and stereo cameras can provide real-time data; AR sensors vary | Real-time deep learning inference is possible |
| Integration & fusion | Often combined (e.g., LiDAR + cameras) for robust sensing | Can integrate multi-source data virtually; deep learning fuses modalities; ray tracing is used to define the geometry of objects in an image |
| Limitations | Sensor cost, environmental sensitivity, hardware maintenance | Requires large datasets and high computational resources; potential generalization issues |
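To give a feel for how ray-based geometry can recover 3D positions from a single photo, here is a minimal sketch of a ray-plane intersection. It is an illustration under strong assumptions, not a description of any particular product's method: an ideal pinhole camera, a flat floor, and a known focal length, principal point, camera height, and downward pitch. The function name `pixel_to_floor` and all parameters are hypothetical.

```python
import math
from typing import Optional, Tuple

def pixel_to_floor(
    u: float, v: float,
    focal_px: float, cx: float, cy: float,
    camera_height_m: float,
    pitch_deg: float = 0.0,
) -> Optional[Tuple[float, float]]:
    """Cast a ray through pixel (u, v) and intersect it with the floor plane.

    Axes: x to the right, y down, z forward; the camera sits at the origin and
    the floor is the plane y = camera_height_m. A positive pitch_deg tilts the
    camera downward. Returns (lateral offset, forward distance) in meters,
    or None when the ray never reaches the floor.
    """
    # Ray direction in the camera frame, normalized so that z = 1.
    x = (u - cx) / focal_px
    y = (v - cy) / focal_px

    # Rotate the ray about the x axis to account for the downward tilt.
    pitch = math.radians(pitch_deg)
    dir_x = x
    dir_y = y * math.cos(pitch) + math.sin(pitch)
    dir_z = -y * math.sin(pitch) + math.cos(pitch)

    if dir_y <= 1e-9:
        return None  # the ray points at or above the horizon

    t = camera_height_m / dir_y  # scale at which the ray reaches y = camera height
    return (t * dir_x, t * dir_z)

# A pixel below the image center maps to a point on the floor in front of the camera.
print(pixel_to_floor(960, 1040, focal_px=1000, cx=960, cy=540, camera_height_m=1.5))
```

In the device-agnostic approach none of these inputs is measured by a sensor. The focal length, camera height, and the pixels that belong to the floor all have to be estimated from the image itself, which is where the learned models come in.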

Implementation scope for image analysis and 3D reconstruction using deep learning

Here we focus on the device-agnostic approach, since it is the most cost-effective option for interior image analysis.

In this case, you need to invest heavily in creating a custom dataset, because public datasets often fall short for real-world applications. You will collect, annotate, and optimize data to train a model that produces accurate analysis results.

You should also consider a multi-step AI pipeline to make your model more adaptable to different real-world scenarios. To achieve this, you will combine multiple techniques (segmentation, keypoint detection, and 3D reconstruction using deep learning) into a unified pipeline.

Overall, reaching a high level of accuracy and robustness takes a significant R&D time investment: months or even years.
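As a rough illustration of what a unified pipeline can look like structurally, here is a minimal sketch in which each stage reads and extends a shared state object. Everything in it is hypothetical: the `SceneState` fields, the stage names, and especially the stage bodies, which return hard-coded placeholder values where a real system would run trained models.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]

@dataclass
class SceneState:
    """Shared state passed from stage to stage (hypothetical structure)."""
    image_size: Tuple[int, int]                                   # (width, height) in pixels
    masks: Dict[str, List[Point]] = field(default_factory=dict)   # class name -> polygon
    keypoints: List[Point] = field(default_factory=list)          # e.g. room corner pixels
    layout: Dict[str, float] = field(default_factory=dict)        # reconstructed quantities

Stage = Callable[[SceneState], SceneState]

def run_pipeline(state: SceneState, stages: List[Stage]) -> SceneState:
    """Run the stages in order; each one consumes the previous stage's output."""
    for stage in stages:
        state = stage(state)
    return state

def segment(state: SceneState) -> SceneState:
    # Placeholder: a real stage would run a segmentation network here.
    w, h = state.image_size
    state.masks["floor"] = [(0, h * 0.6), (w, h * 0.6), (w, h), (0, h)]
    return state

def detect_keypoints(state: SceneState) -> SceneState:
    # Placeholder: takes the top edge of the floor polygon as the floor-wall corners.
    if "floor" not in state.masks:
        raise ValueError("segmentation must run before keypoint detection")
    state.keypoints = state.masks["floor"][:2]
    return state

def reconstruct(state: SceneState) -> SceneState:
    # Placeholder: a real stage would lift the keypoints into a 3D room layout.
    if len(state.keypoints) < 2:
        raise ValueError("keypoint detection must run before reconstruction")
    (x0, y0), (x1, y1) = state.keypoints[:2]
    state.layout["floor_wall_edge_px"] = math.hypot(x1 - x0, y1 - y0)
    return state

result = run_pipeline(SceneState(image_size=(1920, 1080)),
                      [segment, detect_keypoints, reconstruct])
print(result.layout)
```

Keeping the stages behind one small interface makes it easier to retrain or replace a single model without touching the rest, although in practice the stages also need to agree on confidence handling and failure cases that this sketch leaves out.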

Operational costs of building software with 3D scene reconstruction

In the previous section, we covered the time investment, which, of course, affects the cost of the development cycle. Are there any additional expenses to keep in mind?

Based on our experience, two are worth mentioning:

- A dedicated machine learning team: the people who will integrate multiple neural networks, prepare the data, and train the models.

- Leveraging substantial computing resources. For interior image analysis software, you need a powerful and, accordingly, expensive graphics processing unit.

Is there any out-of-the-box software available?

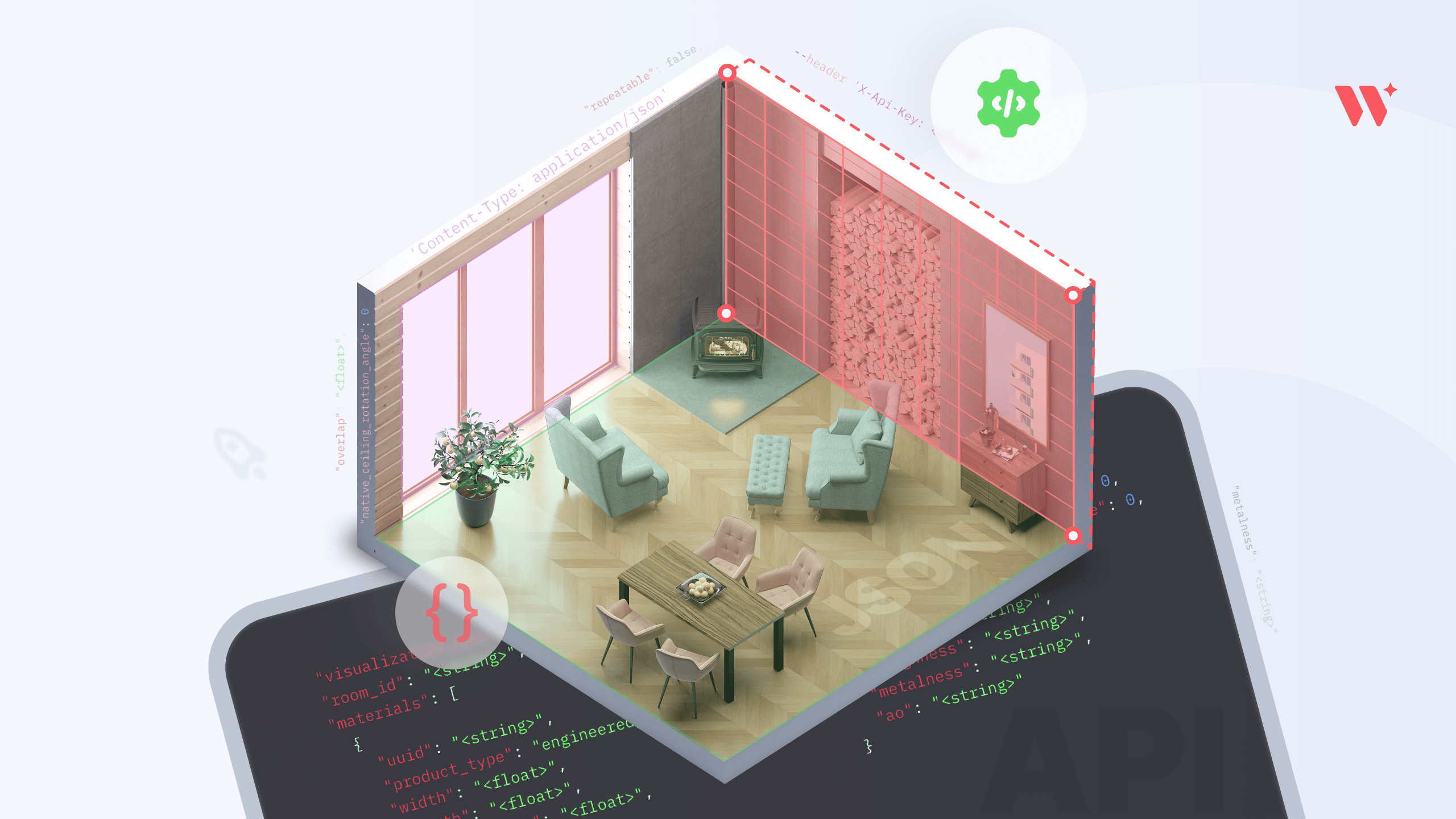

At Wizart, we have solved all the problems described above for you! We developed Vision API, a software solution designed specifically for engineers.

Using techniques such as segmentation, detection, and 3D reconstruction AI, we created an image analyzer for interior pictures. Our system takes into account a variety of lighting conditions, angles, distortions, and layouts without relying on external context.

The output describes the geometry of the room in the picture and the proportions of objects, including wall placement, window openings, ceilings, and floors.

The result is given as a JSON file that contains the coordinates of elements.

You can use this data for further calculations, for example, when building software that visualizes finishing materials or furniture in an interior image.
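As an example of such a follow-up calculation, here is a minimal sketch that reads element coordinates from JSON and computes how much of the image each element type covers. The schema shown (`image`, `elements`, `type`, `polygon`) is invented for this illustration and is not the actual Vision API response format; consult the product documentation for the real field names.

```python
import json
from typing import Dict, List, Sequence

# Hypothetical sample payload; the real response format may differ entirely.
SAMPLE = """
{
  "image": {"width": 1000, "height": 800},
  "elements": [
    {"type": "wall",   "polygon": [[0, 0], [1000, 0], [1000, 500], [0, 500]]},
    {"type": "floor",  "polygon": [[0, 500], [1000, 500], [1000, 800], [0, 800]]},
    {"type": "window", "polygon": [[200, 100], [400, 100], [400, 300], [200, 300]]}
  ]
}
"""

def polygon_area(points: Sequence[Sequence[float]]) -> float:
    """Area of a simple polygon in square pixels (shoelace formula)."""
    twice_area = 0.0
    for i, (x0, y0) in enumerate(points):
        x1, y1 = points[(i + 1) % len(points)]
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0

def coverage_by_type(doc: dict) -> Dict[str, float]:
    """Fraction of the image area covered by each element type."""
    total = doc["image"]["width"] * doc["image"]["height"]
    coverage: Dict[str, float] = {}
    for element in doc["elements"]:
        share = polygon_area(element["polygon"]) / total
        coverage[element["type"]] = coverage.get(element["type"], 0.0) + share
    return coverage

coverage = coverage_by_type(json.loads(SAMPLE))
for name, share in coverage.items():
    print(f"{name}: {share:.1%} of the image")
```

The same polygons could be used to mask the wall region before overlaying a wallpaper texture, or to skip window openings when estimating how much of a wall is paintable.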

What path will you choose?

As you can see, creating image analysis software with semantic 3D reconstruction is a non-trivial task that requires a long and resource-intensive development cycle. A product that aims for a high level of accuracy and robustness needs months or even years of R&D, as well as substantial computing resources and a dedicated machine learning team.

By integrating Wizart Vision API, you can bypass these challenges and implement interior analysis technology immediately, saving time, cost, and effort.